Introduction

As artificial intelligence becomes more integrated into our daily lives, bias in AI systems has gained increasing attention. Generative AI, with its ability to create new content from existing data, presents an exciting frontier in fields ranging from art and music to natural language processing and design. However, because these models are trained on vast amounts of human-generated data, they often inherit the biases embedded in that data. Overcoming bias in generative AI models is a critical topic covered in any generative AI course. It takes both technological and ethical solutions to ensure that AI systems are fair, equitable, and beneficial for all.

Understanding Bias in Generative AI

Bias in AI occurs when a model’s predictions or generated content disproportionately favour or disadvantage certain groups based on characteristics such as race, gender, or socioeconomic status. This bias arises because AI models are trained on datasets that reflect human history, culture, and behaviour, all of which are often steeped in historical inequalities and systemic biases. Choosing the right datasets for training AI models is the first step towards avoiding bias, as you will learn in any generative AI course.

Generative AI models, such as those used to create text, images, or music, are particularly susceptible to bias because they learn from large-scale datasets and generate content that mirrors the patterns in them. If the training texts contain biased language or reflect cultural stereotypes, the model is likely to reproduce those biases in its output.
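
To make this concrete, here is a minimal sketch of how a skew in training text turns into a skew in what a model learns. The corpus, the `PRONOUNS` set, and the function name `pronoun_probabilities` are all made up for illustration, and the counting scheme stands in for a real language model only in the loosest sense: it estimates how likely each pronoun is given a job role, purely from frequency.

```python
from collections import Counter, defaultdict

# Tiny invented corpus; the 2:1 skew per role is deliberate and illustrative.
CORPUS = [
    "the nurse said she was tired",
    "the nurse said she would help",
    "the nurse said he was late",
    "the engineer said he fixed it",
    "the engineer said he was busy",
    "the engineer said she was done",
]

PRONOUNS = {"he", "she"}


def pronoun_probabilities(corpus):
    """Estimate P(pronoun | role) from raw co-occurrence counts."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        role = words[1]  # toy assumption: the role is always the second word
        for word in words:
            if word in PRONOUNS:
                counts[role][word] += 1
    # Normalise the counts for each role so they sum to 1.
    return {
        role: {p: c / sum(ctr.values()) for p, c in ctr.items()}
        for role, ctr in counts.items()
    }


probs = pronoun_probabilities(CORPUS)
for role, dist in probs.items():
    print(role, dist)
```

Nothing in the code prefers one pronoun over another. The association between role and gender comes entirely from the data, which is the sense in which a model inherits bias.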

Common Challenges in Addressing Bias

The main sources of bias in generative AI models are outlined below. An inclusive generative AI course will not only make learners aware of the impact of these biases but also train them to avoid introducing bias as a matter of professional practice.

- Bias in Training Data: The most fundamental source of bias in generative AI models is the training data itself. AI models are only as good as the data they learn from, and if the data is biased, the model’s outputs will reflect that bias. For example, if a text-generating model is trained predominantly on English-language texts from Western countries, it may underrepresent or misrepresent cultures and languages from other parts of the world.

- Bias in Model Architecture: While bias is often rooted in the data, the architecture of the AI model itself can also exacerbate the problem. Some AI models may amplify certain patterns in the data more than others, leading to an over-representation of biased information. For example, a model may favour frequent patterns in the data, such as associating certain job roles with particular genders, thereby reinforcing societal stereotypes even if the training data contains some diversity.

- Bias in Human Intervention: Human developers and engineers play a significant role in the creation and fine-tuning of AI models. Decisions about which datasets to use, how to preprocess the data, and how to define success for the model can all introduce bias. Bias can also arise from subjective judgments about what counts as bias in the first place.

- Bias in User Interaction: Generative AI models often interact with users in real time, generating content based on user prompts. These interactions can introduce new biases if the model adjusts its outputs based on biased or harmful input from users.
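
The point about models amplifying frequent patterns can be sketched with a toy comparison of two generation strategies. This is an illustration under stated assumptions, not a description of any particular model: the 70/30 split is invented, and `greedy_generate` and `sampled_generate` are hypothetical helpers that mimic always picking the most likely output versus sampling in proportion to training frequency.

```python
import random


def data_rate(labels, value):
    """Fraction of items in `labels` that equal `value`."""
    return labels.count(value) / len(labels)


def greedy_generate(labels, n):
    """Always emit the single most frequent training value."""
    top = max(set(labels), key=labels.count)
    return [top] * n


def sampled_generate(labels, n, seed=0):
    """Emit values in proportion to their training frequency."""
    rng = random.Random(seed)
    return [rng.choice(labels) for _ in range(n)]


# Hypothetical training labels for one job title: 70% "he", 30% "she".
train = ["he"] * 70 + ["she"] * 30

greedy = greedy_generate(train, 1000)
sampled = sampled_generate(train, 1000)

print("training rate of 'he':", data_rate(train, "he"))
print("greedy output rate   :", data_rate(greedy, "he"))
print("sampled output rate  :", data_rate(sampled, "he"))
```

In this toy setup, the greedy strategy turns a 70% majority in the data into 100% of the outputs, while proportional sampling stays close to the original split. The training data contains some diversity, yet the generation strategy erases it.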

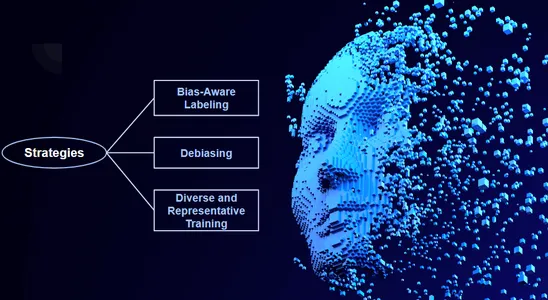

Solutions to Overcoming Bias in Generative AI

Addressing bias in generative AI models requires a multifaceted approach, combining improvements in data collection, model architecture, and ethical guidelines for AI development. Potential solutions often taught in an inclusive programme, such as an AI course in Bangalore, include:

- Diverse and Representative Training Data: One of the most important steps in reducing bias in generative AI is ensuring that the training data is diverse, inclusive, and representative of different cultures, identities, and perspectives. This means curating datasets that are balanced across gender, race, age, geography, and other characteristics. Additionally, data augmentation techniques can be used to artificially expand the diversity of training data by generating variations of existing data points. This can help ensure that the model encounters a more balanced representation of different groups during training.

- Bias Detection and Mitigation Techniques: Bias detection tools can help identify problematic patterns in the model’s outputs. These tools analyse the model’s behaviour and detect whether certain groups are being disproportionately favoured or disadvantaged, for example by comparing how often outputs about each group are favourable. Once bias is detected, mitigation techniques such as reweighting or resampling the training data, fine-tuning on curated examples, or filtering outputs can be applied to reduce its impact.

- Explainability and Transparency: Transparency is key to understanding and addressing bias in AI systems. Explainability tools can provide insights into how a generative AI model arrives at its decisions or generates specific outputs. By understanding which factors the model is prioritising, developers can identify potential sources of bias and make targeted adjustments to the model’s architecture or training process.

- Human-in-the-Loop Approaches: Incorporating human oversight into the AI development process can help catch and correct biased outputs before they reach end-users. Human-in-the-loop (HITL) systems involve humans working alongside AI models to review, adjust, and approve outputs. This collaborative approach can be particularly useful in creative fields, where biases in generated content may be subtle or difficult for an automated system to detect on its own. HITL systems can also be used to provide real-time feedback to the model, allowing it to learn from human input and adjust its behaviour over time.

- Ethical AI Development Standards: Finally, addressing bias in generative AI requires a commitment to ethical AI development. Many organisations are already developing AI ethics frameworks that prioritise reducing bias and promoting fairness in AI applications. These frameworks can help ensure that AI developers are held accountable for the biases in their models and are actively working to minimise harm.
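
Several of the solutions above can be sketched in a few lines. The example below is a minimal illustration, not a production fairness toolkit: the group names, the labelled records, and the 0.1 review threshold are invented, and the helper names (`selection_rates`, `parity_gap`, `balancing_weights`, `needs_review`) are hypothetical. It shows one simple detection measure (the gap in favourable-output rates between groups), one simple mitigation (inverse-frequency weights that rebalance training data), and a rule for escalating to a human reviewer.

```python
from collections import Counter


def selection_rates(records):
    """Favourable-output rate per group from (group, favourable) pairs."""
    totals, positives = Counter(), Counter()
    for group, favourable in records:
        totals[group] += 1
        positives[group] += int(favourable)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(rates):
    """Difference between the best-treated and worst-treated group."""
    return max(rates.values()) - min(rates.values())


def balancing_weights(groups):
    """Inverse-frequency weights so every group has equal total weight."""
    counts = Counter(groups)
    total, n_groups = len(groups), len(counts)
    return [total / (n_groups * counts[g]) for g in groups]


def needs_review(gap, threshold=0.1):
    """Flag for human review when the gap exceeds an (arbitrary) threshold."""
    return gap > threshold


# Hypothetical audit: outputs about each group, labelled favourable or not.
records = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 4 + [("group_b", False)] * 6
)
rates = selection_rates(records)
gap = parity_gap(rates)
print("rates:", rates)
print("gap:", gap, "-> human review needed:", needs_review(gap))

# Hypothetical training set in which group_b is under-represented.
train_groups = ["group_a"] * 6 + ["group_b"] * 2
weights = balancing_weights(train_groups)
print("weights:", weights)
```

The weights could be passed to a training procedure that supports per-example weighting, so that the smaller group is not drowned out. A single metric like this gap is only a starting point, which is why the sketch routes large gaps to a human rather than treating the number as a verdict.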

Conclusion

Overcoming bias in generative AI models is a complex challenge that requires collaboration between AI developers, data scientists, ethicists, and policymakers. While biases in AI reflect the larger societal biases embedded in our data, they also provide an opportunity to address these inequalities through thoughtful design and innovation. By using diverse datasets, developing bias detection tools, implementing human-in-the-loop systems, and adhering to ethical AI standards, we can work towards creating fairer, more inclusive AI systems that serve the needs of all individuals. AI professionals are generally expected to work consciously to overcome bias in their models and algorithms. The curricula of an AI course in Bangalore, and of other reputed learning centres, typically include lessons on overcoming bias as mandatory topics.

For more details, visit us:

Name: ExcelR – Data Science, Generative AI, Artificial Intelligence Course in Bangalore

Address: Unit No. T-2 4th Floor, Raja Ikon Sy, No.89/1 Munnekolala, Village, Marathahalli – Sarjapur Outer Ring Rd, above Yes Bank, Marathahalli, Bengaluru, Karnataka 560037

Phone: 087929 28623

Email: enquiry@excelr.com